I’ve been wanting to learn Kubernetes for a while. I suppose I could have just installed minikube on my laptop, but where is the thrill in that? I want an actual cluster with multiple bare metal nodes. I was inspired by some of the Raspberry Pi projects I saw online, but I’m too cheap to go out and buy 6 Raspberry Pis. Instead, I scored 6 Dell Wyse 5060 thin clients on eBay for a little over $30 each. These babies sport an AMD Steppe Eagle GX-424 2.4 GHz quad-core processor, so that’s 24 cores to work with. Awww yeah. cpubenchmark.net gives these an average CPU Mark of 1761, compared to the Raspberry Pi’s 734. My units also came with 4GB RAM and a 16GB SSD. The power consumption is 7.8 watts at idle, which is a bit high compared to the Raspberry Pi’s minuscule 2.875 watts, but still not bad. For reference, a 27″ iMac consumes about 40 watts at idle.

K3s is the obvious choice for lightweight hardware like this. There are a number of ways to set it up. I certainly could have just installed Linux on each node from a USB stick, logged in, and installed K3s, but we are living in the era of infrastructure as code, so this deployment had to be automated!

I decided to run Flatcar Linux, a fork of CoreOS Container Linux (CoreOS was acquired by Red Hat in 2018). Flatcar is a lightweight “container Linux” which is “designed from the ground up for running container workloads. It fully embraces the container paradigm, including only what is required to run containers.”1

I relied heavily on Janakiram MSV’s excellent tutorial: https://thenewstack.io/tutorial-install-flatcar-container-linux-on-remote-bare-metal-servers/

Along with the 6 nodes, I would need a provisioning server to support PXE booting the nodes and applying a separate image to each one. My goal was a zero-touch deployment, but I did end up having to do a little bit of touching here and there. For one thing, I had to change the boot order in each client’s BIOS settings to put PXE boot ahead of the SSD. This was easier than erasing ThinOS from the drives.

Broadly, the deployment of a node follows this process:

- The node boots in PXE mode and makes a DHCPREQUEST.

- The DHCP Server assigns a static IP address based on the node’s MAC address.

- PXE boot continues with a custom PXE configuration file selected by the node’s IP address.

- The PXE boot file specifies an HTTP-hosted ignition file, customized for that node. (Ignition is similar to cloud-init, but it runs once, early in the initramfs on first boot, which suits this type of deployment.)

- The first ignition file checks to see if this is the first boot, and if it is, handles the OS installation to disk and specifies a second ignition file for subsequent boots.

- We turn off the PXE server and manually reboot the node. On the second boot, the first ignition file is ignored (because it’s not the first boot anymore) and the second ignition file runs.

- The second ignition file checks to make sure K3s is not already installed and, if it’s not, installs K3s and joins the node to the cluster.
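To make the lookup chain in the list above concrete, here is a small Python model of it. It is not part of the actual deployment. It assumes the MAC-to-IP reservations and the node-N-ignite.ign / node-N-ignite-boot.ign naming used later in this post, and RESERVATIONS and boot_plan are hypothetical names for illustration.

```python
# Hypothetical model of the provisioning chain; names are illustrative only.
SERVER = "http://192.168.200.2"  # provisioning server (Apache) from this post

# DHCP reservations: MAC address -> (hostname, fixed IP)
RESERVATIONS = {
    "84:7b:eb:ef:7d:41": ("node-1", "192.168.200.11"),
    "84:7b:eb:ef:7c:7e": ("node-2", "192.168.200.12"),
    "84:7b:eb:ef:64:9d": ("node-3", "192.168.200.13"),
    "84:7b:eb:f1:ea:4c": ("node-4", "192.168.200.14"),
    "84:7b:eb:ef:62:76": ("node-5", "192.168.200.15"),
    "d8:9e:f3:e3:fb:aa": ("node-6", "192.168.200.16"),
}


def boot_plan(mac: str, pxe_boot: bool) -> dict:
    """Describe which Ignition config governs a given boot of a node.

    pxe_boot=True models the PXE/live boot (stage 1: install Flatcar to disk).
    pxe_boot=False models the first boot from disk (stage 2: hostname + K3s).
    """
    hostname, ip = RESERVATIONS[mac.lower()]  # DHCP step: MAC -> fixed IP
    stage = "ignite" if pxe_boot else "ignite-boot"
    return {
        "hostname": hostname,
        "ip": ip,
        "ignition": f"{SERVER}/{hostname}-{stage}.ign",
    }


print(boot_plan("84:7B:EB:EF:7D:41", pxe_boot=True))
```

One caveat on the model: in the real setup the stage-2 file is not fetched at boot time. The stage-1 config downloads it to /opt/ignition.json, and flatcar-install embeds it in the installed system.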

Network Environment

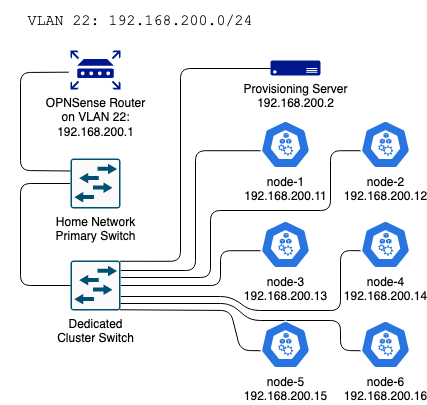

The cluster is connected to a separate switch and lives on its own VLAN with the subnet 192.168.200.0/24. My gateway router is an OPNsense box, so I created the VLAN there, trunked it to the switchport connected to the cluster switch, and disabled DHCP for that segment in the router settings, since the provisioning server would be running the DHCP service.
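Before touching any config, it can help to sanity-check the address plan. This Python sketch uses the standard ipaddress module to confirm that the gateway (.1), the provisioning server (.2), and the six nodes (.11 to .16) used in this post all sit inside 192.168.200.0/24 without collisions. PLAN and validate_plan are hypothetical names.

```python
import ipaddress

VLAN = ipaddress.ip_network("192.168.200.0/24")

# Static addresses described in this post (role/hostname -> IPv4 address)
PLAN = {
    "gateway (OPNsense)": "192.168.200.1",
    "provisioning server": "192.168.200.2",
    **{f"node-{i}": f"192.168.200.{10 + i}" for i in range(1, 7)},
}


def validate_plan(network, plan):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    seen = {}
    for name, text in plan.items():
        addr = ipaddress.ip_address(text)
        if addr not in network:
            problems.append(f"{name}: {addr} is outside {network}")
        elif addr in (network.network_address, network.broadcast_address):
            problems.append(f"{name}: {addr} is the network or broadcast address")
        if addr in seen:
            problems.append(f"{name}: {addr} is already used by {seen[addr]}")
        else:
            seen[addr] = name
    return problems


print(VLAN.broadcast_address)  # the value dhcpd hands out as broadcast-address
print(validate_plan(VLAN, PLAN) or "address plan OK")
```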

Provisioning Server

I installed Ubuntu Server 22.04.1 on the provisioning server, applied updates, and installed the necessary packages:

```shell
sudo apt-get install isc-dhcp-server tftpd-hpa tftp syslinux pxelinux initramfs-tools apache2
```

I assigned the server a static IP of 192.168.200.2.

Additionally, each node needs a static IP, configured as a DHCP reservation keyed to the node’s MAC address in /etc/dhcp/dhcpd.conf.

Also in dhcpd.conf, note the bootp configuration section and the filename option, which tells PXE clients which boot file (pxelinux.0, relative to the TFTP root) to load.

```
default-lease-time 600;
max-lease-time 7200;

# bootp configuration
allow booting;
allow bootp;

subnet 192.168.200.0 netmask 255.255.255.0 {
  range 192.168.200.100 192.168.200.245;
  option broadcast-address 192.168.200.255;
  option routers 192.168.200.1;
  option domain-name "<your domain here>";
  option domain-name-servers 8.8.8.8, 1.1.1.1;

  group {
    host node-1 {
      hardware ethernet 84:7b:eb:ef:7d:41;
      fixed-address 192.168.200.11;
    }
    host node-2 {
      hardware ethernet 84:7b:eb:ef:7c:7e;
      fixed-address 192.168.200.12;
    }
    host node-3 {
      hardware ethernet 84:7b:eb:ef:64:9d;
      fixed-address 192.168.200.13;
    }
    host node-4 {
      hardware ethernet 84:7b:eb:f1:ea:4c;
      fixed-address 192.168.200.14;
    }
    host node-5 {
      hardware ethernet 84:7b:eb:ef:62:76;
      fixed-address 192.168.200.15;
    }
    host node-6 {
      hardware ethernet d8:9e:f3:e3:fb:aa;
      fixed-address 192.168.200.16;
    }
  }

  filename "/pxelinux.0";
}
```
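Hand-writing six nearly identical host blocks is easy to get wrong, and a syntax error in dhcpd.conf (such as an unclosed host block) keeps dhcpd from starting. Here is a minimal Python sketch that renders the blocks from a table and rejects any fixed address inside the dynamic range 192.168.200.100 to 192.168.200.245. host_stanzas is a hypothetical helper, not part of isc-dhcp-server.

```python
import ipaddress
import re

MAC_RE = re.compile(r"[0-9a-f]{2}(:[0-9a-f]{2}){5}")

# (hostname, MAC, fixed IP) as in the dhcpd.conf reservations
NODES = [
    ("node-1", "84:7b:eb:ef:7d:41", "192.168.200.11"),
    ("node-2", "84:7b:eb:ef:7c:7e", "192.168.200.12"),
    ("node-3", "84:7b:eb:ef:64:9d", "192.168.200.13"),
    ("node-4", "84:7b:eb:f1:ea:4c", "192.168.200.14"),
    ("node-5", "84:7b:eb:ef:62:76", "192.168.200.15"),
    ("node-6", "d8:9e:f3:e3:fb:aa", "192.168.200.16"),
]


def host_stanzas(nodes, pool_start, pool_end):
    """Render dhcpd host blocks, rejecting bad MACs, duplicates, and pool overlaps."""
    lo = ipaddress.ip_address(pool_start)
    hi = ipaddress.ip_address(pool_end)
    seen_macs, seen_ips, blocks = set(), set(), []
    for name, mac, ip in nodes:
        mac = mac.lower().replace("-", ":")  # accept 84-7B-... style too
        addr = ipaddress.ip_address(ip)
        if not MAC_RE.fullmatch(mac):
            raise ValueError(f"{name}: malformed MAC {mac!r}")
        if lo <= addr <= hi:
            raise ValueError(f"{name}: {addr} is inside the dynamic range")
        if mac in seen_macs or addr in seen_ips:
            raise ValueError(f"{name}: duplicate MAC or IP")
        seen_macs.add(mac)
        seen_ips.add(addr)
        # Doubled braces are literal { } in f-strings, so every block is closed.
        blocks.append(
            f"host {name} {{\n"
            f"  hardware ethernet {mac};\n"
            f"  fixed-address {addr};\n"
            f"}}"
        )
    return "\n".join(blocks)


print(host_stanzas(NODES, "192.168.200.100", "192.168.200.245"))
```

After pasting the output into the group section, running dhcpd -t -cf /etc/dhcp/dhcpd.conf checks the file’s syntax without starting the server.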

I was not about to squint at a tiny label and manually type the MAC addresses, so I connected and powered on each one and ran:

```shell
systemctl status isc-dhcp-server
```

The MAC addresses can be copied and pasted from the results:

```
Feb 09 01:52:54 pixie dhcpd[2799]: DHCPREQUEST for 192.168.200.11 from 84:7b:eb:ef:7d:41 via enp2s0
Feb 09 01:52:54 pixie dhcpd[2799]: DHCPACK on 192.168.200.11 to 84:7b:eb:ef:7d:41 via enp2s0
```

Next, I configured the TFTP server by editing /etc/default/tftpd-hpa:

```shell
RUN_DAEMON="yes"
TFTP_USERNAME="tftp"
TFTP_DIRECTORY="/tftpboot"
TFTP_ADDRESS=":69"
TFTP_OPTIONS="--secure"
```

Then, to create the necessary directories, copy over the boot files, and set the permissions:

```shell
sudo mkdir -p /tftpboot/pxelinux.cfg
sudo cp /usr/lib/PXELINUX/pxelinux.0 /tftpboot/
sudo mkdir -p /tftpboot/boot
sudo cp -r /usr/lib/syslinux/modules/bios /tftpboot/boot/isolinux
sudo chmod -R 777 /tftpboot
```

Download the Flatcar images to /tftpboot/ and verify them:

```shell
cd /tftpboot
wget https://stable.release.flatcar-linux.net/amd64-usr/current/flatcar_production_pxe.vmlinuz
wget https://stable.release.flatcar-linux.net/amd64-usr/current/flatcar_production_pxe.vmlinuz.sig
wget https://stable.release.flatcar-linux.net/amd64-usr/current/flatcar_production_pxe.cpio.gz
wget https://stable.release.flatcar-linux.net/amd64-usr/current/flatcar_production_pxe_image.cpio.gz
wget https://stable.release.flatcar-linux.net/amd64-usr/current/flatcar_production_pxe_image.cpio.gz.sig
# gpg --verify only succeeds once Flatcar's image signing key is in your keyring
gpg --verify flatcar_production_pxe.vmlinuz.sig
gpg --verify flatcar_production_pxe_image.cpio.gz.sig
```

Next, I created a PXE boot file for each node. For PXELINUX to associate a file with a node, the filename must be the node’s IP address in hexadecimal form. For example, here is my node-1 PXE boot file, which will be applied to the client with IP 192.168.200.11 (C0 A8 C8 0B in hex). BTW, the hexadecimal filename must be in ALL CAPS. I killed a little time figuring that out:

```
# /tftpboot/pxelinux.cfg/C0A8C80B
default flatcar
prompt 1
timeout 15
display boot.msg

label flatcar
  menu default
  kernel flatcar_production_pxe.vmlinuz
  initrd flatcar_production_pxe_image.cpio.gz
  append flatcar.first_boot=1 ignition.config.url=http://192.168.200.2/node-1-ignite.ign
```

Notice how the PXE boot file hands off the boot process to the node-1 ignition file on the last line. So now we need to start apache2 and serve up the ignition files for the next stage of deployment. I highly recommend reading about Ignition here: https://flatcar.org/docs/latest/provisioning/ignition/
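Since every node needs its own file named after its IP in uppercase hex, the conversion is worth scripting. Here is a minimal Python sketch, assuming the node-1 file shown here as the template and the node-N-ignite.ign naming. pxe_filename, render_pxe_config, and write_pxe_configs are hypothetical helpers.

```python
import ipaddress
from pathlib import Path

# PXELINUX config template based on the node-1 file in this post.
TEMPLATE = """\
default flatcar
prompt 1
timeout 15
display boot.msg

label flatcar
  menu default
  kernel flatcar_production_pxe.vmlinuz
  initrd flatcar_production_pxe_image.cpio.gz
  append flatcar.first_boot=1 ignition.config.url=http://192.168.200.2/{name}-ignite.ign
"""


def pxe_filename(ip: str) -> str:
    """Return the IPv4 address as 8 uppercase hex digits, e.g. C0A8C80B."""
    return f"{int(ipaddress.IPv4Address(ip)):08X}"


def render_pxe_config(name: str) -> str:
    """Fill the node name into the template's ignition URL."""
    return TEMPLATE.format(name=name)


def write_pxe_configs(nodes, cfg_dir="/tftpboot/pxelinux.cfg"):
    """Write one config per (name, ip) pair and return the paths written."""
    out = Path(cfg_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, ip in nodes:
        path = out / pxe_filename(ip)
        path.write_text(render_pxe_config(name))
        paths.append(path)
    return paths
```

Calling write_pxe_configs([(f"node-{i}", f"192.168.200.{10 + i}") for i in range(1, 7)]) as root would produce C0A8C80B through C0A8C810. The :08X format spec is what guarantees the uppercase, zero-padded form PXELINUX looks for.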

We want our installation ignition file to run on the first boot, and then the “ignite-boot” file once the OS is installed. To identify the first boot, Ignition relies on GRUB to check for the presence of a file at flatcar/first_boot. PXE clients don’t use GRUB, so we have to append flatcar.first_boot=1 as a boot argument in the PXE file. The first ignition file handles OS installation; then, after a reboot, the OS looks to a second ignition file for configuration and services (K3s, in our case). The ignition files are written in YAML (as Container Linux Configs) and then transpiled into JSON format (more on that later). Here is my node-1 ignition file in YAML form. An SSH public key is added here for remote login:

```yaml
systemd:
  units:
    - name: installer.service
      enabled: true
      contents: |
        [Unit]
        Requires=network-online.target
        After=network-online.target

        [Service]
        Type=forking
        TimeoutStartSec=600
        RemainAfterExit=yes
        ExecStart=/usr/bin/sh -c "flatcar-install -d /dev/sda -i /opt/ignition.json && udevadm settle && systemctl reboot"

        [Install]
        WantedBy=multi-user.target
passwd:
  users:
    - name: core
      ssh_authorized_keys:
        - ssh-rsa ABCDEFGHIJKLMNOP1234567890!@#$%^&*(){}
storage:
  files:
    - path: /opt/ignition.json
      filesystem: root
      mode: 0777  # leading 0 = octal; a bare 777 is read as decimal
      contents:
        remote:
          url: http://192.168.200.2/node-1-ignite-boot.ign
```

Note the handoff at the end to the next ignition file, node-1-ignite-boot.ign. It is downloaded to /opt/ignition.json and passed to flatcar-install with -i, which makes it the Ignition config of the installed system. That file sets the hostname and deploys K3s. Again, this is the human-readable YAML form, which will be transpiled to JSON before serving:

```yaml
systemd:
  units:
    - name: k3s-install.service
      enabled: true
      contents: |
        [Unit]
        Description=Run K3s script
        Wants=network-online.target
        After=network.target network-online.target
        ConditionPathExists=/opt/k3s-install.sh
        ConditionPathExists=!/opt/bin/k3s

        [Service]
        Type=forking
        TimeoutStartSec=180
        RemainAfterExit=yes
        KillMode=process
        Environment="K3S_TOKEN=secret_edgecluster_token"
        Environment="INSTALL_K3S_EXEC=--cluster-init"
        ExecStart=/usr/bin/sh -c "/opt/k3s-install.sh"

        [Install]
        WantedBy=multi-user.target
passwd:
  users:
    - name: core
      ssh_authorized_keys:
        - ssh-rsa ABCDEFGHIJKLMNOP1234567890!@#$%^&*(){}
storage:
  files:
    - path: /etc/hostname
      filesystem: root
      mode: 0644
      contents:
        inline: node-1
    - path: /opt/k3s-install.sh
      filesystem: root
      mode: 0777  # leading 0 = octal; a bare 777 is read as decimal
      contents:
        remote:
          url: https://get.k3s.io
```

Note the Environment variable, which for node-1 is set to "INSTALL_K3S_EXEC=--cluster-init". This tells K3s to initialize a new cluster with embedded etcd.

For subsequent nodes, the Environment variable will be set to "INSTALL_K3S_EXEC='--server' 'https://192.168.200.11:6443'"
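Because that one line is the only difference between the nodes’ K3s units, it can be generated. Here is a minimal Python sketch, assuming node-1 at 192.168.200.11 bootstraps the cluster and every other node joins it with the same token and the same quoting shown here. k3s_environment is a hypothetical helper.

```python
FIRST_SERVER = "https://192.168.200.11:6443"  # node-1's K3s API endpoint


def k3s_environment(node_number: int, token: str) -> list[str]:
    """Return the Environment= lines for a node's k3s-install.service unit."""
    if node_number == 1:
        # The first server initializes the embedded etcd cluster.
        exec_args = "--cluster-init"
    else:
        # Later servers join node-1, using the same quoting as in this post.
        exec_args = f"'--server' '{FIRST_SERVER}'"
    return [
        f'Environment="K3S_TOKEN={token}"',
        f'Environment="INSTALL_K3S_EXEC={exec_args}"',
    ]


for line in k3s_environment(2, "secret_edgecluster_token"):
    print(line)
```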

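Next, the ignition files are transpiled to JSON format and placed in the /var/www/html directory, where Apache serves them at URLs such as http://192.168.200.2/node-1-ignite.ign. The tool to use is container-linux-config-transpiler (ct). A Container Linux Config is not just Ignition JSON written as YAML. ct maps its fields onto Ignition’s schema (for example, ssh_authorized_keys becomes sshAuthorizedKeys, and inline file contents become data: URLs), so use ct rather than a generic YAML-to-JSON converter.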
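With 12 files to keep in sync, the transpile step can be scripted. This Python sketch makes several assumptions:

- The ct binary is on PATH and accepts --in-file and --out-file (check ct --help for your version).
- The YAML sources are named like node-1-ignite.yaml.
- The output goes to /var/www/html.

transpile and ignition_version are hypothetical helpers. The version check is only a basic sanity test, not full Ignition validation.

```python
import json
import shutil
import subprocess
from pathlib import Path

WEB_ROOT = Path("/var/www/html")  # Apache's default document root on Ubuntu


def ignition_version(text: str) -> str:
    """Basic sanity check: parse JSON and return ignition.version, or raise."""
    doc = json.loads(text)
    version = doc.get("ignition", {}).get("version")
    if not version:
        raise ValueError("missing ignition.version; is this really an Ignition config?")
    return version


def transpile(yaml_path, out_dir=WEB_ROOT):
    """Run ct on one Container Linux Config and return the path of the .ign file."""
    if shutil.which("ct") is None:
        raise FileNotFoundError("ct (container-linux-config-transpiler) is not on PATH")
    yaml_path = Path(yaml_path)
    # node-1-ignite.yaml -> node-1-ignite.ign, matching the URLs in the PXE files
    out_path = Path(out_dir) / (yaml_path.stem + ".ign")
    subprocess.run(
        ["ct", "--in-file", str(yaml_path), "--out-file", str(out_path)],
        check=True,
    )
    ignition_version(out_path.read_text())
    return out_path
```

For example, for src in sorted(Path("configs").glob("node-*.yaml")): transpile(src) would rebuild everything. The configs directory is a hypothetical location for the YAML sources.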

Booting the Nodes

Once the provisioning server was configured with 6 custom PXE boot files, 6 node-x-ignite.ign files, and 6 node-x-ignite-boot.ign files, it was time to boot the nodes! I booted each one in succession. Once they were all responding to pings, I shut down the TFTP service to prevent PXE booting on any subsequent reboots. Then I SSH’d into each node (note that the hostname is “localhost” because the second ignition file hasn’t run yet) and rebooted it. Upon the second boot, the nodes get their hostnames, and K3s downloads and installs! I logged into node-1, which now greets me with its proper hostname, and checked the list of nodes:

```
core@node-1 ~ $ sudo k3s kubectl get nodes
NAME     STATUS   ROLES
node-1   Ready    control-plane,etcd,master
node-2   Ready    control-plane,etcd,master
node-3   Ready    control-plane,etcd,master
node-4   Ready    control-plane,etcd,master
node-5   Ready    control-plane,etcd,master
node-6   Ready    control-plane,etcd,master
```

Six nodes, ready to rock!
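Eyeballing six rows is fine, but for scripting, checking kubectl’s JSON output is sturdier than parsing the table. This Python sketch relies on the Kubernetes Node status format, in which each node’s status.conditions list includes a Ready condition whose status is "True" when the node is healthy. not_ready_nodes and fetch_nodes_json are hypothetical helpers, and the fetch step has to run on a node where sudo k3s kubectl works.

```python
import json
import subprocess


def fetch_nodes_json() -> str:
    """Run 'k3s kubectl get nodes -o json' (needs root on a K3s server node)."""
    result = subprocess.run(
        ["sudo", "k3s", "kubectl", "get", "nodes", "-o", "json"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def not_ready_nodes(nodes_json: str) -> list[str]:
    """Return the names of nodes whose Ready condition is not 'True'."""
    bad = []
    for item in json.loads(nodes_json)["items"]:
        conditions = item.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
        )
        if not ready:
            bad.append(item["metadata"]["name"])
    return bad
```

On node-1, not_ready_nodes(fetch_nodes_json()) should return an empty list when all six nodes are Ready.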

Conclusion

Overall, I’m pleased with the outcome of this project. I was able to automatically provision a bare metal Kubernetes cluster on cheap, low-power hardware that will be easy to maintain and upgrade over time. If I want to add another node, I can simply give it a static IP address and clone a few files (a DHCP reservation, a PXE boot file, and two ignition files) before attaching it to the network and booting up. I look forward to digging deeper into Kubernetes topics and deploying some workloads to the cluster.